How to Utilize Large Language Models (LLMs) in Your Next Data Analysis Project

Welcome to another edition of “In the Minds of Our Analysts.”

At System2, we foster a culture of encouraging our team to express their thoughts, investigate, pen down, and share their perspectives on various topics. This series provides a space for our analysts to showcase their insights.

All opinions expressed by System2 employees and their guests are solely their own and do not reflect the opinions of System2. This post is for informational purposes only and should not be relied upon as a basis for investment decisions. Clients of System2 may maintain positions in the securities discussed in this post.

Today’s post was written by Aquiba Benarroch.

Like almost every other industry, the world of finance is rapidly embracing the transformative power of Artificial Intelligence (AI), particularly Large Language Models (LLMs). Integrating these advanced technologies is no longer just a competitive edge—it's becoming essential. Financial firms that do not leverage AI's potential risk falling significantly behind in an increasingly tech-driven landscape.

The beauty of today's AI revolution lies in its accessibility. Most AI models, notably the highly acclaimed ones from OpenAI, Google, and Anthropic, are available through paid APIs. Others, such as Mistral's models or those published on Hugging Face, offer open-source (free) access. The cost-effectiveness of these models is remarkable. With OpenAI's API, for instance, each call costs very little, often a fraction of a cent. Costs can add up in extensive use cases, but the pricing still underscores how affordable cutting-edge AI technology has become.

What's truly remarkable is the paradigm shift these technologies bring. Users, including those in the financial sector, can now outsource the intricate task of developing AI models to industry experts like OpenAI. This means we can focus on leveraging these models in our applications without getting bogged down in the complexities of AI/ML development.

In this post, I aim to share insights into the remarkable accessibility and user-friendliness of these models. One key takeaway is the simplicity of integrating this technology into our analytical processes; it takes about 10 lines of code to incorporate the power behind ChatGPT into our analysis.

However, the real challenge lies elsewhere. It's not just about the technical integration but about mastering prompt engineering, applying the technology to the right kind of analysis, and, most critically, drawing accurate and insightful conclusions. At the end of the day, these models are sophisticated tools, and like any tool, their effectiveness hinges on the skill and knowledge of the user.

Case Study: Analysis of Customer Surveys and Reviews

In a recent project, I leveraged the OpenAI API to delve into customer surveys and reviews for a set of restaurants. The challenge was to process and make sense of a massive amount of unstructured data – a task perfectly suited for AI.

We could efficiently analyze thousands of customer review entries using the API, categorizing sentiments (positive, negative, or neutral) and identifying key themes. This not only provided a clear picture of customer satisfaction and areas for improvement but also offered insights into new service opportunities.

Beyond basic sentiment analysis, we employed prompt engineering to extract quantitative measures from the data. We could determine how frequently certain themes were mentioned in the reviews by crafting specific prompts. This approach allowed us to quantify aspects that traditionally require complex Natural Language Processing (NLP) algorithms or regular expressions (regex) parsing.

The end result was a list of the top 5 themes for positive and negative reviews ranked by the number of times the theme appeared in reviews, as illustrated in the following visualization:

This structured data provides actionable insights, enabling analysts to understand the areas that have the greatest impact on customer satisfaction.

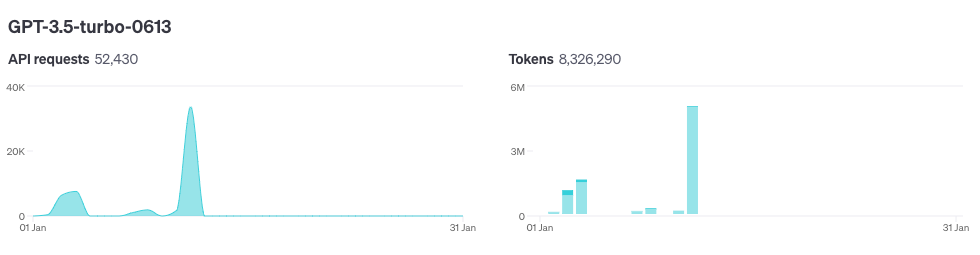

In terms of cost-efficiency, the OpenAI API proved to be remarkably economical. For our project, we used the GPT-3.5 Turbo model and made over 52,000 API requests. Despite this volume, and the more than eight million tokens sent via these API calls, the total expenditure amounted to just $12.75. This included the generation of 50,000 output tokens, which formed the basis of our analysis.
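To put those figures in perspective, here is a rough back-of-the-envelope calculation. It treats "over 52,000" requests and "over eight million" tokens as exact values, which is an assumption, so the per-token figure should be read as an upper bound.

```python
# Figures quoted in the post; the request and token counts are lower bounds,
# treated here as exact for a rough estimate (an assumption).
total_cost_usd = 12.75
api_requests = 52_000
tokens_sent = 8_000_000

cost_per_million_tokens = total_cost_usd / tokens_sent * 1_000_000
cost_per_thousand_requests = total_cost_usd / api_requests * 1_000
avg_tokens_per_request = tokens_sent / api_requests

print(f"${cost_per_million_tokens:.2f} per million tokens sent")
print(f"${cost_per_thousand_requests:.2f} per thousand requests")
print(f"{avg_tokens_per_request:.0f} tokens per request on average")
```

Under these assumptions, the project cost about $1.59 per million tokens sent, or roughly 25 cents per thousand requests, with an average of about 154 tokens per request.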

Technical Insight: Implementing AI with Python

For those interested in the technical side, implementing AI-driven analysis is surprisingly straightforward, thanks to Python and OpenAI's user-friendly API. Let's take a brief look at how this works in practice.

To start, we set up the OpenAI client in Python:
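A minimal sketch of such a setup, assuming the v1-style `openai` Python package and credentials stored in the `OPENAI_API_KEY` and `OPENAI_ORG_ID` environment variables. The helper names `credentials_from_env` and `build_client` are illustrative and not part of the library.

```python
import os


def credentials_from_env(env=os.environ):
    """Collect the API key and (optional) organization ID from a mapping."""
    return {
        "api_key": env["OPENAI_API_KEY"],          # required
        "organization": env.get("OPENAI_ORG_ID"),  # optional, may be None
    }


def build_client(env=os.environ):
    """Create the OpenAI client from the credentials above."""
    # Imported here so the module still loads where the package is not installed.
    from openai import OpenAI

    return OpenAI(**credentials_from_env(env))
```

Keeping the key in an environment variable, rather than in the source file, avoids committing it to version control by accident.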

This snippet initializes the connection to OpenAI's services, using your organization ID and API key for access.

Next, we use a simple yet powerful method to get ChatGPT-like responses:
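One way such a call might look, assuming a `client` object created with the v1-style `openai` package and its `chat.completions.create` method. The helper names `build_request` and `ask` are hypothetical; the default values mirror the ones discussed in this post.

```python
def build_request(instruction, prompt, model="gpt-3.5-turbo",
                  max_tokens=5, temperature=0.1):
    """Assemble the keyword arguments for a chat completion call."""
    return {
        "model": model,
        "messages": [
            {"role": "system", "content": instruction},  # how to behave
            {"role": "user", "content": prompt},          # what to analyze
        ],
        "max_tokens": max_tokens,    # cap on the length of the reply
        "temperature": temperature,  # low value for repeatable answers
    }


def ask(client, instruction, prompt, **options):
    """Send one request and return the text of the first reply."""
    response = client.chat.completions.create(
        **build_request(instruction, prompt, **options)
    )
    return response.choices[0].message.content.strip()
```

A call such as `ask(client, "Classify the sentiment as Positive, Negative, or Neutral.", review_text)` would then return the model's one-word label for a single review.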

In these lines, we're essentially instructing the API to process our input (customer reviews, Reddit posts, or any text data) and provide a tailored response based on the given model and prompt.

Understanding the Code

model parameter

For our case study, the model of choice was gpt-3.5-turbo. This selection was strategic; gpt-3.5-turbo is a cost-effective option that excels in understanding and processing natural language, making it highly suitable for analyzing customer feedback and social media posts.

However, it's important to acknowledge certain limitations. One such limitation is the context window limit, which refers to the amount of text the model can process in a single query. For gpt-3.5-turbo, this limit is 4,096 tokens, or roughly 3,000 words of English text. The limit covers the prompt and the response together, so while the model is adept at handling substantial amounts of data, each query and its answer need to fit within it.

This presented a unique challenge in our case study: we couldn't simply feed thousands of reviews into the system in one go. Instead, we had to design a method to break up the data thoughtfully. This approach ensured we could extract the necessary insights and stay within the model’s constraints. For more information about this and other models, you can visit OpenAI's model descriptions.
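One simple way to do this is to group reviews into batches that stay under a token budget. The sketch below is an assumption about how such batching could work, not the method used in the project. It estimates tokens with a rough four-characters-per-token rule of thumb, and the names `estimate_tokens` and `batch_reviews` and the 3,000-token budget are illustrative. A tokenizer library would give exact counts.

```python
def estimate_tokens(text):
    """Rough token estimate: about four characters per token (a heuristic)."""
    return max(1, len(text) // 4)


def batch_reviews(reviews, token_budget=3000):
    """Group reviews into batches whose estimated size fits the budget.

    The budget is kept below the context window to leave room for the
    instruction and the model's reply. A single review larger than the
    budget ends up alone in its own batch.
    """
    batches, current, used = [], [], 0
    for review in reviews:
        cost = estimate_tokens(review)
        # Start a new batch when adding this review would exceed the budget.
        if current and used + cost > token_budget:
            batches.append(current)
            current, used = [], 0
        current.append(review)
        used += cost
    if current:
        batches.append(current)
    return batches
```

Each batch can then be sent as a separate request, and the results combined afterwards.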

messages parameter

In our Python code, the instruction and prompt variables play a critical role, and they are incorporated within a list named messages. This list is essential for structuring our interaction with the AI. Here's a breakdown:

The messages list comprises a series of message objects, each with a specific role ('system' or 'user') and its content.

{"role": "system", "content": instruction}: The **system** role is used to set the context or provide specific instructions to the AI. This is akin to giving ChatGPT custom instructions. For instance, in our customer review analysis, the **instruction** could tell the AI to identify sentiment trends or key themes from the reviews. A common generic choice is an instruction like “You are a helpful assistant.”

{"role": "user", "content": prompt}: Under the **user** role, we input the actual content or query for the AI to process. In practical terms, **prompt** is the raw data or the specific question you pose to the AI. Continuing with our example, **prompt** would be the actual customer review text or a specific Reddit post you want analyzed.
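To make the two roles concrete, here is a sketch of a theme-extraction setup for restaurant reviews, together with a simple tally of the replies. The instruction wording, the comma-separated reply format, and the helper names are all assumptions made for illustration, not the prompts used in the project.

```python
from collections import Counter

# Hypothetical system instruction: asks for a short, machine-readable reply.
THEME_INSTRUCTION = (
    "You analyze restaurant reviews. Reply with a comma-separated list of "
    "up to three short themes mentioned in the review, and nothing else."
)


def theme_messages(review):
    """Build the messages list for one review."""
    return [
        {"role": "system", "content": THEME_INSTRUCTION},
        {"role": "user", "content": review},
    ]


def parse_themes(reply):
    """Split a comma-separated reply into normalized theme names."""
    return [t.strip().lower() for t in reply.split(",") if t.strip()]


def top_themes(replies, n=5):
    """Count how many replies mention each theme and return the top n."""
    counts = Counter()
    for reply in replies:
        counts.update(set(parse_themes(reply)))  # once per review
    return counts.most_common(n)
```

Asking for a fixed reply format in the system message is what makes the answers easy to count afterwards.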

max_tokens parameter (optional)

A token is not quite the same thing as a word. On average, a token represents about four characters of English text, which could be an entire word, part of a word, or even punctuation. This granularity matters because the max_tokens limit caps how much text we get back from a single query. Working within this limit is crucial for using the API effectively.

The max_tokens parameter also plays a vital role in cost optimization. By limiting the number of tokens returned from an API call, we can manage both the scope of the AI's response and the associated costs. For instance, in analyzing sentiment from customer reviews, if we tailor our instruction or prompt only to expect responses like "Positive", "Negative", or "Neutral", we can set the max_tokens to 4 or 5. This aligns the response length with our anticipated answer, ensuring efficiency in both processing and cost.
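With such a tight cap, it helps to validate each reply before counting it, since the model may still add punctuation or drift from the expected labels. A small sketch of that check follows; the function name and the "Unknown" fallback are illustrative choices, not something taken from the project.

```python
VALID_LABELS = ("Positive", "Negative", "Neutral")


def normalize_sentiment(reply):
    """Map a raw model reply to one of the expected labels, else 'Unknown'."""
    # Drop surrounding whitespace and stray punctuation, then fix the casing.
    cleaned = reply.strip().strip(".!\"'").capitalize()
    return cleaned if cleaned in VALID_LABELS else "Unknown"
```

Replies that come back as "Unknown" can be reviewed by hand or sent again with a stricter instruction.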

temperature parameter (optional)

Another crucial aspect of working with AI models like gpt-3.5-turbo is the temperature setting. In our code, you'll notice that the temperature is set to a low value of 0.1. This setting is intentional and plays a vital role in the accuracy of the output.

The temperature parameter controls the level of randomness in the AI's responses. A lower temperature means the responses will be more deterministic and less prone to hallucinations. In the context of financial analysis and customer review sentiment analysis, precision is key. We want to ensure that a positive review is consistently recognized as positive, and similarly, any financial data is interpreted with the highest level of accuracy.

It's worth noting that we can pass several more parameters and options to the OpenAI API call, which can further refine and tailor the AI's responses to our specific needs. These are beyond the scope of this post, but I encourage those interested to delve deeper into the possibilities. For more information on these parameters, you can visit OpenAI's API documentation.

Conclusion

In the ever-evolving world of finance, the analyst's role is not just to gather data but to derive smarter, more insightful conclusions from it. Tools like OpenAI's API are revolutionizing how we approach this task, offering unprecedented capabilities in processing and analyzing information. However, the true power of these tools lies not only in their accessibility but in our ability to apply them appropriately.